Examine Data

When a machine learning model is evaluated with cross-validation, conventional parametric tests produce overly optimistic results. This is because the individual errors across cross-validation folds are not independent of one another: when a subject is in the training set, it affects the errors of the subjects in the test set. A parametric null distribution that assumes independence between samples will therefore be too narrow and yield overly optimistic p-values. The recommended way to test the statistical significance of predictions in a cross-validation setting is to use a permutation test (Golland and Fischl 2003; Noirhomme et al. 2014).
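The permutation test described above can be sketched in a few lines. This is a minimal illustration rather than the procedure from the cited papers: the model (ordinary least squares), the score (negative mean squared error), the synthetic data, and the function names `cv_predict`, `cv_score`, and `permutation_test` are all assumptions made for the example.

```python
import numpy as np

def cv_predict(X, y, n_folds=5):
    """Out-of-fold predictions from a least-squares model fitted per fold."""
    n = len(y)
    preds = np.empty(n)
    # Contiguous folds; real data would usually be shuffled first.
    for test_idx in np.array_split(np.arange(n), n_folds):
        train = np.ones(n, dtype=bool)
        train[test_idx] = False
        # Fit intercept + slopes on the training part only.
        A_train = np.column_stack([np.ones(train.sum()), X[train]])
        coef, *_ = np.linalg.lstsq(A_train, y[train], rcond=None)
        A_test = np.column_stack([np.ones(len(test_idx)), X[test_idx]])
        preds[test_idx] = A_test @ coef
    return preds

def cv_score(X, y, n_folds=5):
    """Negative mean squared error of the out-of-fold predictions."""
    return -np.mean((cv_predict(X, y, n_folds) - y) ** 2)

def permutation_test(X, y, n_permutations=200, n_folds=5, seed=0):
    """Compare the observed CV score with scores obtained on shuffled labels."""
    rng = np.random.default_rng(seed)
    observed = cv_score(X, y, n_folds)
    # Each permutation reruns the whole cross-validation, so the null
    # distribution inherits the same dependence between folds.
    null = np.array([
        cv_score(X, rng.permutation(y), n_folds)
        for _ in range(n_permutations)
    ])
    # Add-one correction keeps the p-value strictly above zero.
    p_value = (np.sum(null >= observed) + 1) / (n_permutations + 1)
    return observed, null, p_value

# Synthetic example: the outcome depends on the first feature only.
rng = np.random.default_rng(42)
X = rng.normal(size=(60, 3))
y = X[:, 0] + 0.5 * rng.normal(size=60)

observed, null, p_value = permutation_test(X, y)
print(f"observed score: {observed:.3f}, p-value: {p_value:.4f}")
```

Because the null distribution is built by repeating the full cross-validation on permuted outcomes, no independence assumption between folds is needed.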

The most common way to control for confounds in neuroimaging is to adjust the input variables (e.g., voxels) for confounds using linear regression before they’re used as input to a machine learning analysis (Snoek et al. 2019). In the case of categorical confounds, this is equivalent to centering each category by its mean, so that the average value of each group with respect to the confounding variable is the same. In the case of continuous confounds, the effect on the input variables is usually estimated using ordinary least squares (OLS) regression. This adjustment may not be sufficient, however, because machine learning models can capture information in the data that cannot be captured and removed using OLS. Therefore, even after adjustment, machine learning models may still make predictions based on the effects of confounding variables.
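As a rough illustration of input adjustment and its limits, the sketch below regresses a confound out of two synthetic features with OLS. The helper `regress_out`, the variable names (`age`, `site`), and the simulated data are assumptions made for the example, not part of any cited method.

```python
import numpy as np

def regress_out(X, C):
    """Return X with the linear (OLS) effect of the confounds C removed."""
    A = np.column_stack([np.ones(len(C)), C])      # intercept + confounds
    beta, *_ = np.linalg.lstsq(A, X, rcond=None)   # one fit per column of X
    return X - A @ beta

rng = np.random.default_rng(0)
n = 500

# Continuous confound (hypothetical "age"): the first feature depends on
# it linearly, the second one quadratically.
age = rng.normal(size=n)
X = np.column_stack([
    2.0 * age + rng.normal(size=n),
    age ** 2 + 0.1 * rng.normal(size=n),
])
X_adj = regress_out(X, age)

# The linear dependence is removed exactly ...
lin_corr = np.corrcoef(X_adj[:, 0], age)[0, 1]
# ... but the quadratic dependence survives the adjustment.
nonlin_corr = np.corrcoef(X_adj[:, 1], age ** 2)[0, 1]
print(f"linear residual correlation:    {lin_corr:.3f}")
print(f"nonlinear residual correlation: {nonlin_corr:.3f}")

# Categorical confound (hypothetical "site"): OLS on one-hot indicators
# is the same as centering each group by its own mean.
site = rng.integers(0, 3, size=n)
X_site = regress_out(X, np.eye(3)[site])
group_means = np.array([X_site[site == k].mean(axis=0) for k in range(3)])
print(group_means.round(10))
```

The second feature shows why adjustment can fall short: a nonlinear model could still recover the confound from the adjusted inputs.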

Dealing With Extraneous and Confounding Variables in Research

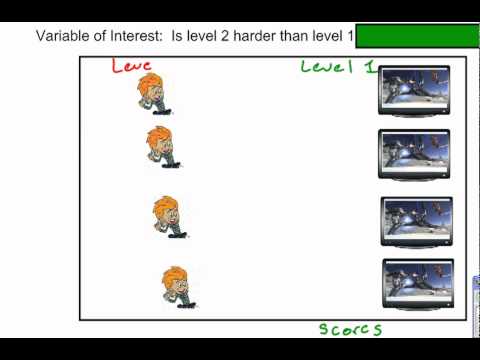

Anything could happen to the test subject in the “between” period, so this does not provide perfect immunity from confounding variables. To estimate the effect of X on Y, the statistician must suppress the effects of extraneous variables that influence both X and Y. We say that X and Y are confounded by some other variable Z whenever Z causally influences both X and Y. A confounding variable is closely related to both the independent and dependent variables in a study.

Support vector machines (SVMs) optimize a hinge loss, which is more robust to extreme values than the squared loss used for input adjustment. Therefore, the presence of outliers in the data will lead to improper input adjustment that can be exploited by the SVM. Studies using penalized linear or logistic regression (i.e., lasso, ridge, elastic net) and classical linear Gaussian process models should not be affected by these confounds, since these models are not more robust to outliers than OLS regression.

In a regression setting, there are several equivalent ways to estimate the proportion of variance of the outcome explained by machine learning predictions that cannot be explained by the effect of confounds. One is to estimate the partial correlation between the model predictions and the outcome, controlling for the effect of confounding variables.

Machine learning predictive models are now commonly used in clinical neuroimaging research, with the promise of being useful for disease diagnosis and for predicting prognosis or treatment response (Wolfers et al. 2015).
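The partial correlation mentioned above can be computed by residualizing both the predictions and the outcome on the confounds and then correlating the residuals. The sketch below assumes a single continuous confound and simulated predictions; the function names `residualize` and `partial_correlation` are hypothetical.

```python
import numpy as np

def residualize(v, C):
    """Residuals of v after an OLS fit on the confounds C (plus intercept)."""
    A = np.column_stack([np.ones(len(v)), C])
    beta, *_ = np.linalg.lstsq(A, v, rcond=None)
    return v - A @ beta

def partial_correlation(pred, y, C):
    """Correlation between predictions and outcome, controlling for C."""
    return np.corrcoef(residualize(pred, C), residualize(y, C))[0, 1]

rng = np.random.default_rng(0)
n = 1000
confound = rng.normal(size=n)
signal = rng.normal(size=n)
y = confound + signal + 0.5 * rng.normal(size=n)

# Predictions driven only by the confound vs. predictions that also
# carry information beyond the confound.
pred_confound_only = confound + 0.1 * rng.normal(size=n)
pred_with_signal = confound + signal

raw_corr = np.corrcoef(pred_confound_only, y)[0, 1]
partial_confound_only = partial_correlation(pred_confound_only, y, confound)
partial_with_signal = partial_correlation(pred_with_signal, y, confound)

print(f"raw correlation (confound-only predictions): {raw_corr:.3f}")
print(f"partial correlation (confound-only):         {partial_confound_only:.3f}")
print(f"partial correlation (with signal):           {partial_with_signal:.3f}")
```

The confound-only predictions look useful on the raw correlation but carry almost nothing once the confound is controlled for. The squared partial correlation gives the share of the outcome variance left unexplained by the confounds that the predictions account for.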